Real-time user interface production with generative AI hints at radical changes to UX design and accessibility

This post contains a lot of questions and very few answers. I hope you enjoy it regardless and that it triggers some thoughts.

Back in March, OpenAI demonstrated their newest model “GPT-4V” (the V is for Vision) on a dev stream:

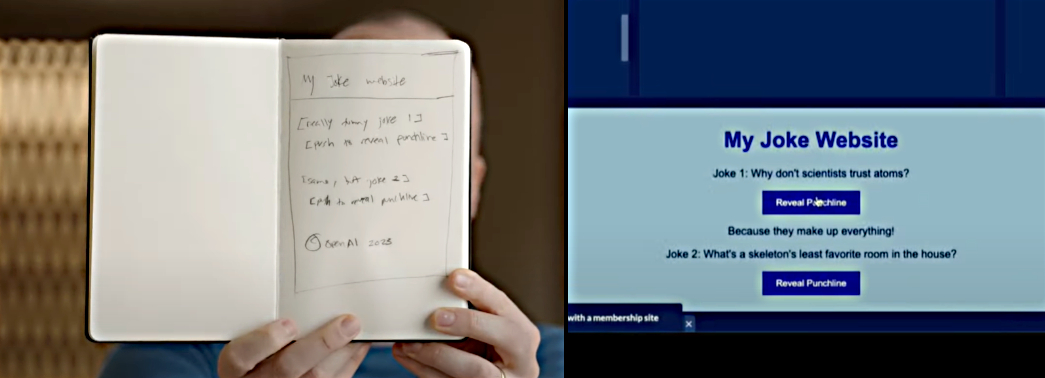

In this YouTube video at 16:19, Greg Brockman demonstrates turning a rough sketch of a website into a working prototype, almost instantly.

In this example, GPT-4V not only interpreted the image but went on to write the HTML, CSS, and JavaScript for interactivity. It also wrote the jokes and punchlines (although the jokes aren’t great)! GPT-4V used a heading and body copy, and styled the buttons to appear as clickable elements.

Is it a huge leap to imagine a model, years (or more likely months) from now, that can generate a user interface on the fly, in real time?

Imagine: an advanced digital experience layer understands the visitor and prompts a generative AI model with all the interface requirements, their methods, and something like:

“The user is a 30-year-old IT professional who has used the system 459 times previously, accessing at 2am local time from a mobile device. Use only our design system to build the most suitable UI for the server management list view. Customize the action buttons for the user’s most likely task.”

The front-end code is generated and displayed, and it’s exactly what this user needs right now:

- Tailored to their device viewport and interaction method

- Tailored to the likely task they want to perform

- Customized to the user’s preferences

- Streamlined and optimized for their familiarity and experience level

- Using the in-house design system to perfectly match the existing look and feel

This imagined approach makes today’s personalization engines look like rudimentary templates.
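
The prompt-assembly step imagined above could be sketched roughly as follows. This is only an illustration: the `UserContext` and `ViewRequest` shapes, the `buildUiPrompt` function, and the component names are all hypothetical, and the call to an actual model is left out entirely.

```typescript
// Hypothetical shape of what the experience layer knows about the visitor.
interface UserContext {
  age: number;
  profession: string;
  previousSessions: number;
  localTime: string; // free-form, e.g. "2am"
  device: "mobile" | "tablet" | "desktop";
}

// Hypothetical request: which view to build and which components are allowed.
interface ViewRequest {
  view: string;
  allowedComponents: string[];
}

// Turns the known context into a plain-text prompt for a generative model.
function buildUiPrompt(user: UserContext, request: ViewRequest): string {
  return [
    `The user is a ${user.age}-year-old ${user.profession} who has used the system ` +
      `${user.previousSessions} times previously, accessing at ${user.localTime} ` +
      `local time from a ${user.device} device.`,
    `Use only these design system components: ${request.allowedComponents.join(", ")}.`,
    `Build the most suitable UI for the ${request.view}.`,
    "Customize the action buttons for the user's most likely task.",
  ].join("\n");
}

// Example values taken from the imagined prompt; the component names are made up.
const examplePrompt = buildUiPrompt(
  {
    age: 30,
    profession: "IT professional",
    previousSessions: 459,
    localTime: "2am",
    device: "mobile",
  },
  {
    view: "server management list view",
    allowedComponents: ["DataTable", "ActionButton", "StatusBadge"],
  }
);
```

Listing the allowed components explicitly in the prompt is one possible way to express "use only our design system"; whether a model would reliably honor that constraint is an open question.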

How soon will we be using AI-generated front-end user interfaces?

How does this change user experience?

As imagined, this kind of approach has the potential to massively improve user experience by providing a better-optimized user interface to each user.

Discoverability, usability and accessibility can all be enhanced by showing the user the right views, controls and flows at the right time - customized to their context.

There are challenges too: how does help and support work if every experience is different? How do we ensure that design ethics are followed and the AI doesn’t employ deceptive patterns to manipulate users?

How does this change design?

Design Systems become more vital than ever before.

Organizations with a robust, scalable design system comprising a pattern library and front-end code components will be first in line to take advantage of this imagined technology.

What are the designers designing? It’s no longer about laying out views, but instead about prompt engineering, design systems, and laying out the rules the AI must follow to produce the best interfaces.

We need a new framework or language to describe the front-end. Just as HTML and CSS separated structure and presentation, we need a new markup for “intent” or “abilities” in which the UI’s elements, methods and properties are defined in a declarative way - for the AI to assemble into a front-end dynamically.
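
To make the idea of an “abilities” markup a little more concrete, here is a minimal sketch. No such standard exists today, so every name in it (`Ability`, `ViewIntent`, `validateLayout`, the `server.*` ids) is an assumption made for illustration. The view declares what can be done, and a small check confirms that a layout proposed by a model only uses declared abilities.

```typescript
// Hypothetical declarative description of one thing the UI can do or show.
interface Ability {
  id: string; // e.g. "server.restart"
  label: string;
  kind: "action" | "field" | "collection";
  destructive?: boolean; // hint that the UI should ask for confirmation
}

// Hypothetical description of a view: its name and everything it may expose.
interface ViewIntent {
  name: string;
  abilities: Ability[];
}

// Checks that a model-proposed layout (an ordered list of ability ids)
// references only abilities the view actually declares.
function validateLayout(
  intent: ViewIntent,
  proposedIds: string[]
): { ok: boolean; unknown: string[] } {
  const known: { [id: string]: boolean } = {};
  for (const ability of intent.abilities) {
    known[ability.id] = true;
  }
  const unknown = proposedIds.filter((id) => known[id] !== true);
  return { ok: unknown.length === 0, unknown };
}

const serverList: ViewIntent = {
  name: "server management list view",
  abilities: [
    { id: "server.list", label: "Servers", kind: "collection" },
    { id: "server.restart", label: "Restart", kind: "action", destructive: true },
    { id: "server.rename", label: "Rename", kind: "action" },
  ],
};

// "server.format" is not declared above, so this layout is rejected.
const layoutCheck = validateLayout(serverList, [
  "server.list",
  "server.restart",
  "server.format",
]);
```

The point of the sketch is the separation: designers and engineers own the declaration, the model only arranges what has been declared, and anything outside it can be rejected before it reaches the user.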

How do we measure and evaluate experience metrics if every experience is different? A new breed of user experience analytics will be needed to work with these dynamic front-ends where simple flow and drop-off metrics won’t be as useful.

How does this change accessibility?

According to the WebAIM Million (2023) report, 96% of the top one million home pages on the web have detectable accessibility errors, so we’re not exactly excelling so far in producing accessible front-end code.

Does generative AI produce accessible front-end experiences? It’s too soon to know, but we should act responsibly by building in checks for accessibility and by training models on accessible code and accessibility guidelines. This is a huge opportunity to ensure that AI-generated front-end code is accessible by default.
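
As a rough idea of what a built-in check could look like, the sketch below scans a generated HTML string for two very basic problems: images with no `alt` attribute and buttons with no accessible name. The function name `findBasicA11yIssues` is made up, and pattern matching on markup like this is deliberately simplistic. A real pipeline would more likely run an established accessibility testing tool against the rendered page, and automated checks only catch a fraction of real accessibility problems.

```typescript
// Very rough gate for generated markup. Assumes the model returns an HTML string.
function findBasicA11yIssues(html: string): string[] {
  const issues: string[] = [];

  // Images need an alt attribute (an empty alt is valid for decorative images).
  const imgTags: string[] = html.match(/<img\b[^>]*>/gi) || [];
  for (const tag of imgTags) {
    if (!/\salt\s*=/i.test(tag)) {
      issues.push(`Image without alt attribute: ${tag}`);
    }
  }

  // Buttons need visible text or an aria-label to have an accessible name.
  const buttonPattern = /<button\b([^>]*)>([\s\S]*?)<\/button>/gi;
  let match: RegExpExecArray | null = buttonPattern.exec(html);
  while (match !== null) {
    const attrs = match[1] || "";
    // Strip nested tags (icons, spans) and see whether any text remains.
    const text = (match[2] || "").replace(/<[^>]*>/g, "").trim();
    if (text === "" && !/\saria-label\s*=/i.test(attrs)) {
      issues.push(`Button without accessible name: ${match[0]}`);
    }
    match = buttonPattern.exec(html);
  }

  return issues;
}

// Made-up sample of generated markup: one bad image and one bad button.
const sampleIssues = findBasicA11yIssues(
  '<img src="a.png"><button></button>' +
    '<button aria-label="Close"></button><img src="b.png" alt="">'
);
```

A gate like this could reject or regenerate an interface before it is shown, which is one way "accessible by default" might be enforced rather than hoped for.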

Will this make every website and application a dystopian nightmare?

Possibly. If design ethics and accessibility are ignored, the web would become a more manipulative place which excludes more people.

On the other hand, it has the potential to revolutionize access to systems - reduce learning curves, increase efficiency and truly allow access for all.

How likely is this to happen?

If experimentation with AI-generated UI is shown to predictably deliver greater user satisfaction, greater retention, or higher revenues for commercial entities, then it’s a certainty.