On Artificial Intelligence in User Experience Design

Or, why you won’t be replaced by a robot (yet).

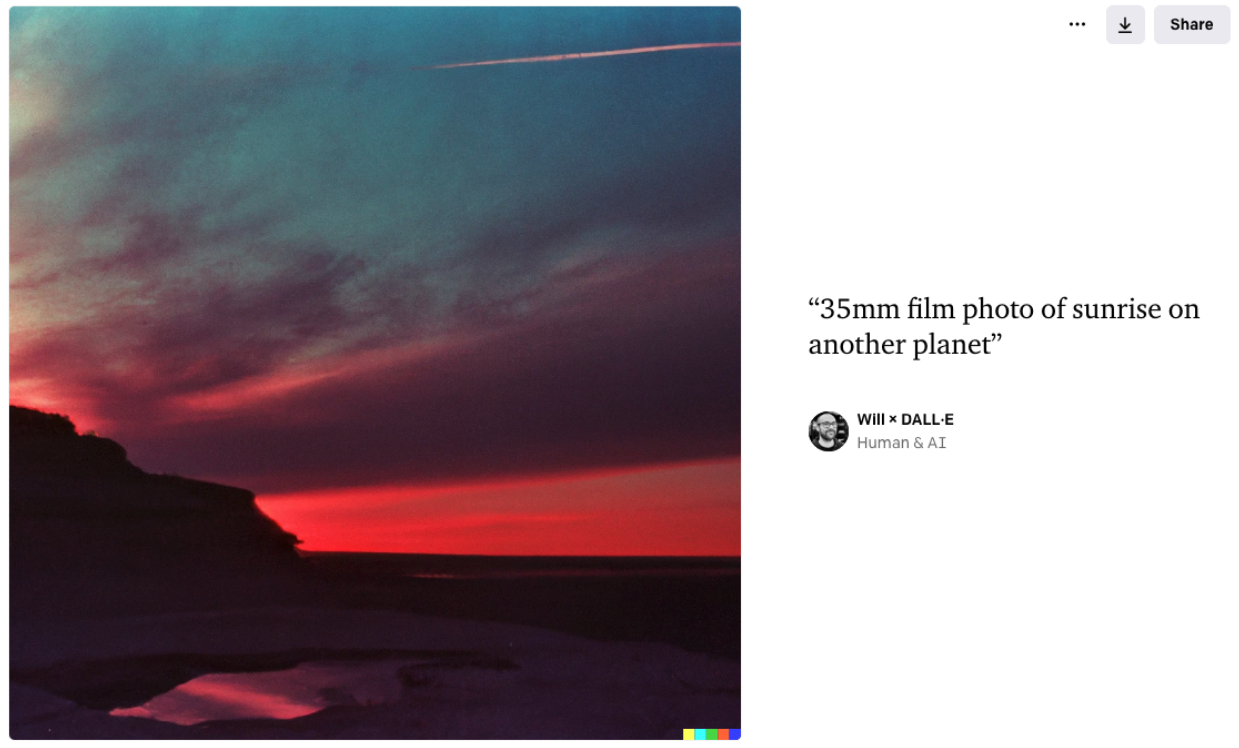

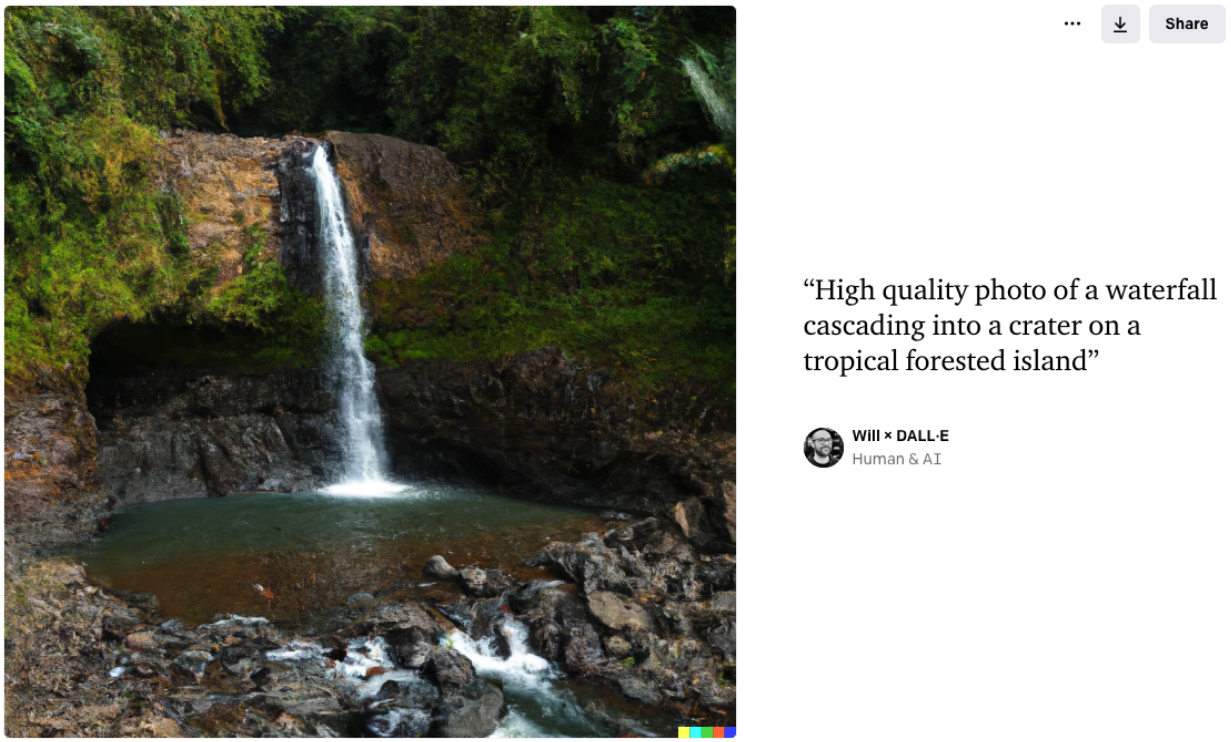

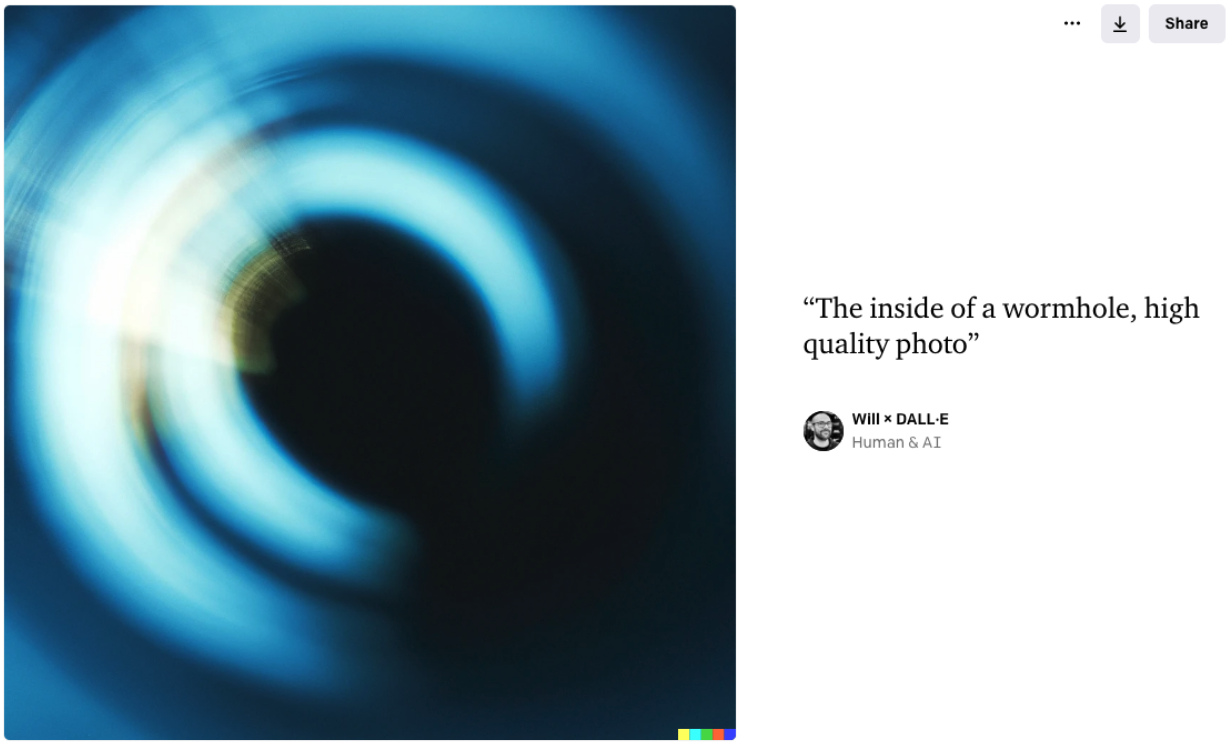

DALL-E 2, Stable Diffusion and GauGAN are some examples of cutting-edge AI systems that use advanced machine learning to generate realistic imagery from a text prompt. I’ve been playing with all three over the past few months, and some of the results you can generate are breathtaking.

There’s an analogy to Wikipedia here: because these models were trained on billions of human-created images, they inherit something like Wikipedia’s “sum total of human knowledge”, in this case knowledge of what makes an attractive image. Colours, lighting and composition are drawn from the images we all collectively thought were good enough to keep rather than simply delete.

It’s why the waterfall isn’t upside down - we already deleted all those images, so the AI only knows the best of what we have.

Then as we publish these AI-generated images to the web, they go back into the pool of images in the world for training the next generation of AI models.

So what would happen if the AI was trained on the best wireframes, the best Figma mockups and the best user interface prototypes? Billions of them?

What happens if you get an AI to draw your wireframes for you?

Hmmmm. I’m not going to be submitting these to design peer review just yet.

But it’s easy to imagine the direction this is going in.

“Most people overestimate what they can achieve in a year and underestimate what they can achieve in ten years.” - Bill Gates

Fidelity will improve. Models trained only on user interface images will be better at drawing them. They’ll learn from convention and produce the best design for a situation about 80% of the time, needing a bit of a tweak for the last 20%.

Speed will improve. Training models and rendering images currently take a long time, but - like everything in tech - computing power and software efficiency will improve until designs take a couple of seconds to produce, or are even produced in real time as you work.

Editability will be possible. Instead of just flat bitmaps, we’ll be given vector-based components that can be edited and moved around.

“User interface for a teleporter”

Imagine an extension to Figma that uses your existing design system, placing the components into views based on AI prompts - it won’t be perfect, but it’ll get you 80% of the way there.

“Ok Figma, a mobile app register view with forgot password and option to sign in existing users”
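
To make the idea concrete, here is a toy sketch in Python. It is not a real Figma plugin and uses no Figma API: the component names, the keyword table and the `layout_from_prompt` function are all hypothetical, and simple keyword matching stands in for whatever AI model would actually interpret the prompt. It only shows the shape of the workflow: a prompt goes in, components from an existing design system come out, stacked into a view.

```python
from dataclasses import dataclass

# Hypothetical design system: component name -> prompt keywords that suggest it.
# These names are placeholders for illustration, not real Figma components.
DESIGN_SYSTEM = {
    "EmailField": ["register", "sign in", "login"],
    "PasswordField": ["register", "sign in", "login"],
    "PrimaryButton": ["register", "submit"],
    "ForgotPasswordLink": ["forgot password"],
    "SignInLink": ["sign in"],
}


@dataclass
class PlacedComponent:
    name: str
    y: int  # vertical offset within the view, in pixels


def layout_from_prompt(prompt: str, row_height: int = 56) -> list[PlacedComponent]:
    """Pick components whose keywords appear in the prompt and stack them vertically.

    Keyword matching is a stand-in (assumption) for a trained model.
    """
    text = prompt.lower()
    chosen = [
        name
        for name, keywords in DESIGN_SYSTEM.items()
        if any(keyword in text for keyword in keywords)
    ]
    return [PlacedComponent(name, i * row_height) for i, name in enumerate(chosen)]


view = layout_from_prompt(
    "Ok Figma, a mobile app register view with forgot password "
    "and option to sign in existing users"
)
for component in view:
    print(f"{component.y:>4}px  {component.name}")
```

The output is a first draft to tweak by hand, which is the 80% starting point rather than a finished design.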

Your job as a UX designer doesn’t change - you still have to understand the problems to be solved and come up with solutions. The difference is that you won’t need to draw the solutions out by hand. An AI will help you get there, giving you more time to think about users, solve problems and build out a robust design system.

“Users prefer your site to work the same way as all the other sites they already know” - Jakob Nielsen

Should you really be designing your user interface from scratch every time? Of course not. If the AI is already trained on a billion images of the best user interfaces out there, why waste time reinventing the wheel?